Automate ELK installation and configuration with Ansible

This post describes how to use a set of Ansible roles I put together to install and configure the ELK stack (version 7.x) on a remote server. It walks through preparing each role, running the playbook, and the extra JVM configuration your cluster might need.

Note: The installation and configuration of the stack are split into multiple roles to simplify the steps and make them reusable. The roles target Ubuntu Server and were run on Ubuntu Server 18.04 with 4 CPU cores and 8GB of RAM; the right machine size depends entirely on your situation and data volume.

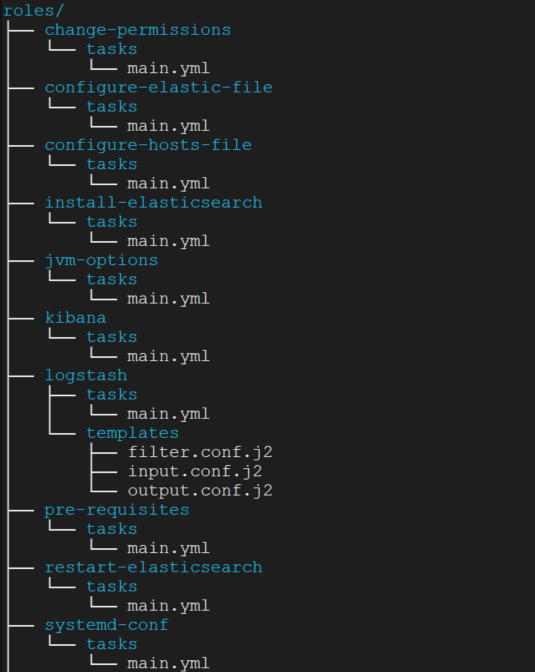

Roles Overview

The roles follow the classic Ansible structure:

1- pre-requisites: Installs the packages needed before installing Elasticsearch.

```yaml
- name: Install Packages
  apt:
    # a list installs every package in one apt transaction, no loop needed
    name:
      - apt-transport-https
      - openssl
      - default-jdk
    state: latest
    update_cache: yes
```

2- configure-hosts-file: Updates /etc/hosts on your ELK nodes from the Ansible inventory (because elasticsearch.yml refers to the nodes by name).
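The role relies on two things from the inventory: the es-cluster group it loops over, and an ansible_host value for each member of that group. A minimal sketch of a YAML inventory that provides both could look like this; the host names and private IPs are hypothetical, and the same file also declares the groups targeted by the playbook (elk-hosts, kibana-host, logstash-host).

```yaml
# inventory.yml: hypothetical host names and IP addresses
all:
  children:
    es-cluster:            # the group configure-hosts-file loops over
      hosts:
        es-node-1:
          ansible_host: 10.0.0.11
        es-node-2:
          ansible_host: 10.0.0.12
        es-node-3:
          ansible_host: 10.0.0.13
    elk-hosts:             # targeted by the Elasticsearch plays
      children:
        es-cluster:
    kibana-host:           # Kibana installed on an existing Elasticsearch node
      hosts:
        es-node-1:
    logstash-host:         # Logstash installed on an existing Elasticsearch node
      hosts:
        es-node-1:
```

With this inventory, the role would write lines such as `10.0.0.11 es-node-1` into /etc/hosts on every node. Recent Ansible versions may warn about the dashes in these group names; the names still work as written.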

```yaml
---
# group name: es-cluster
- name: Update the /etc/hosts file with node name
  become: yes
  lineinfile:
    dest: "/etc/hosts"
    regexp: '.*{{ item }}$'
    line: "{{ hostvars[item].ansible_host }} {{ item }}"
    state: present
  when: hostvars[item].ansible_host is defined
  with_items: "{{ groups['es-cluster'] }}"
```

3- install-elasticsearch: This role sets up the Elastic APT repository and installs Elasticsearch. To install a different version of the stack, edit the major version in the repository URL:

```yaml
repo: deb https://artifacts.elastic.co/packages/7.x/apt stable main
```

```yaml
---
- name: Import the Elastic Key
  apt_key:
    url: https://artifacts.elastic.co/GPG-KEY-elasticsearch
    state: present

- name: Adding Elastic APT repository
  apt_repository:
    repo: deb https://artifacts.elastic.co/packages/7.x/apt stable main
    state: present
    filename: elastic-7.x   # Ansible appends ".list" automatically
    update_cache: yes

- name: install elasticsearch
  apt:
    name: elasticsearch
    state: present
    update_cache: yes

- name: reload systemd config
  systemd:
    daemon_reload: yes

- name: enable service elasticsearch and ensure it is not masked
  systemd:
    name: elasticsearch
    enabled: yes
    masked: no

- name: ensure elasticsearch is running
  systemd:
    name: elasticsearch
    state: started
```
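Instead of editing the URL by hand, the version could be driven by variables. The sketch below is a hypothetical variant of the repository and install tasks; elastic_major_version and elasticsearch_version are assumed variable names (not part of the original role) that you would set, for example, with --extra-vars. The variable only changes the repository line; other major versions may need further changes (8.x, for instance, turns security on by default).

```yaml
- name: Adding Elastic APT repository
  apt_repository:
    # hypothetical variable; falls back to the 7.x repository used in this post
    repo: "deb https://artifacts.elastic.co/packages/{{ elastic_major_version | default('7') }}.x/apt stable main"
    state: present
    filename: "elastic-{{ elastic_major_version | default('7') }}.x"
    update_cache: yes

- name: install elasticsearch
  apt:
    # without elasticsearch_version this installs the newest package in the repo;
    # with elasticsearch_version=7.17.0 it requests "elasticsearch=7.17.0"
    name: "elasticsearch{{ ('=' ~ elasticsearch_version) if elasticsearch_version is defined else '' }}"
    state: present
    update_cache: yes
```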

4- configure-elastic-file: In my case, I prepared one elasticsearch.yml file and copy it to every Elasticsearch node so they all share the same configuration.
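For reference, a minimal elasticsearch.yml for a three-node 7.x cluster might look like the sketch below. The cluster name and the node names es-node-1 to es-node-3 are hypothetical; the names must resolve through the /etc/hosts entries written by configure-hosts-file. node.name is left unset so it defaults to each machine's hostname, which is what lets one identical file be copied to every node; this assumes the hostnames match the names in cluster.initial_master_nodes. Binding to a non-loopback address makes Elasticsearch enforce its production bootstrap checks, including the vm.max_map_count check and, with bootstrap.memory_lock enabled, the memory-lock check; the systemd-conf role prepares both.

```yaml
# Hypothetical elasticsearch.yml for a three-node Elasticsearch 7.x cluster
cluster.name: elk-cluster
# node.name is not set: it defaults to the machine's hostname
path.data: /var/lib/elasticsearch
path.logs: /var/log/elasticsearch
# listen on all interfaces; a non-loopback address enables the bootstrap checks
network.host: 0.0.0.0
# lock the heap in RAM; needs the memlock limits from the systemd-conf role
bootstrap.memory_lock: true
# node names resolved through the /etc/hosts entries from configure-hosts-file
discovery.seed_hosts: ["es-node-1", "es-node-2", "es-node-3"]
# only used to bootstrap a brand-new cluster; Elastic recommends removing it afterwards
cluster.initial_master_nodes: ["es-node-1", "es-node-2", "es-node-3"]
```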

```yaml
---
- name: Configure elasticsearch.yml File
  copy:
    src: path/to/your/conf/file.yml
    dest: /etc/elasticsearch/elasticsearch.yml
    owner: root
    group: elasticsearch
```

5- change-permissions: Sets the ownership and permissions for Elasticsearch.

```yaml
---
- name: set elasticsearch permissions
  file:
    path: /usr/share/elasticsearch
    state: directory
    recurse: yes
    owner: elasticsearch
    group: elasticsearch
```

6- systemd-conf: Configures system settings: the memory-lock limits, the vm.max_map_count kernel setting, and the elasticsearch.service unit file.

```yaml
---
- name: Add or modify memlock, both soft and hard, limit for elasticsearch user.
  pam_limits:
    domain: elasticsearch
    limit_type: '-'
    limit_item: memlock
    value: unlimited
    comment: unlimited memory lock for elasticsearch

- name: set LimitMEMLOCK to infinity.
  lineinfile:
    path: /usr/lib/systemd/system/elasticsearch.service
    insertafter: 'LimitAS=infinity'
    line: 'LimitMEMLOCK=infinity'
    state: present

- name: set vm.max_map_count to 262144 in sysctl
  sysctl:
    name: "{{ item.key }}"
    value: "{{ item.value }}"
    sysctl_file: /etc/sysctl.conf   # the default: the value is persisted here and reloaded
  with_items:
    - { key: "vm.max_map_count", value: "262144" }

# Optional: the sysctl module above already writes and reloads /etc/sysctl.conf.
# changed_when keeps this extra reload from reporting a change on every run.
- name: Reload /etc/sysctl.conf
  command: sysctl -p /etc/sysctl.conf
  changed_when: false
```
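Editing /usr/lib/systemd/system/elasticsearch.service works, but that file belongs to the package and can be replaced on upgrade. Elastic's documentation instead suggests a systemd drop-in override. A sketch of that alternative, which would take the place of the lineinfile task above (override.conf is the file name `systemctl edit` uses):

```yaml
- name: Create the systemd drop-in directory for elasticsearch
  file:
    path: /etc/systemd/system/elasticsearch.service.d
    state: directory
    owner: root
    group: root
    mode: "0755"

- name: Set LimitMEMLOCK=infinity through a drop-in
  copy:
    dest: /etc/systemd/system/elasticsearch.service.d/override.conf
    content: |
      [Service]
      LimitMEMLOCK=infinity
    owner: root
    group: root
    mode: "0644"
```

The daemon_reload: yes in the restart-elasticsearch role makes systemd read the drop-in. The unit-level limit is the one that counts for the service, since pam_limits applies to login sessions rather than systemd services, and either limit only matters when bootstrap.memory_lock: true is set in elasticsearch.yml.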

7- restart-elasticsearch: Finally, restart Elasticsearch so the changes above take effect.

```yaml
---
- name: restart elasticsearch after change configuration by configure-elastic-file role
  systemd:
    name: elasticsearch
    state: restarted
    daemon_reload: yes

- name: ensure elasticsearch is running
  systemd:
    name: elasticsearch
    state: started
```

With the seven roles above, Elasticsearch is installed and configured.

8- jvm-options: An optional role for when you need to change the JVM heap size in the jvm.options file of your Elasticsearch nodes (the default is 1g).

```yaml
- name: Set min JVM Heap size.
  lineinfile:
    dest: /etc/elasticsearch/jvm.options
    regexp: "^-Xms"
    line: "-Xms{{ elasticsearch_jvm_xms }}"
  tags:
    - config

# tagged as well, so "--tags config" never changes only one of the two values
- name: Set max JVM Heap size.
  lineinfile:
    dest: /etc/elasticsearch/jvm.options
    regexp: "^-Xmx"
    line: "-Xmx{{ elasticsearch_jvm_xmx }}"
  tags:
    - config
```

Variables can be passed with `--extra-vars`.
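Rather than passing the heap sizes on every run, they can live in a group variables file. In the sketch below the file location is an assumption (a group_vars directory next to your inventory or playbook); the values follow Elastic's guidance to give -Xms and -Xmx the same value and to use no more than half of the machine's RAM, which is 4g on the 8GB server used in this post.

```yaml
# group_vars/elk-hosts.yml: hypothetical file; the variable names are the
# ones the jvm-options role reads. Keep both values equal and at most ~50%
# of RAM so the rest stays available to the OS file system cache.
elasticsearch_jvm_xms: 4g
elasticsearch_jvm_xmx: 4g
```

Heap changes only apply after Elasticsearch restarts, and the playbook in this post does not call jvm-options, so if you use it, list it before restart-elasticsearch in the configure play.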

9- kibana: Installs, enables, and configures the Kibana service.

```yaml
---
# Important: if you install Kibana on a node that already runs Elasticsearch,
# start from the third task (the key and repository are already in place).
# If you install Kibana on a clean instance, start from the first task.
- name: Import the Elastic Key
  apt_key:
    url: https://artifacts.elastic.co/GPG-KEY-elasticsearch
    state: present

- name: Adding Kibana APT repository
  apt_repository:
    repo: deb https://artifacts.elastic.co/packages/7.x/apt stable main
    state: present
    filename: kibana-7.x   # Ansible appends ".list" automatically
    update_cache: yes

# Install Kibana
- name: Update repositories cache and install Kibana
  apt:
    name: kibana
    update_cache: yes

# Configure kibana.yml
- name: Configure kibana.yml File
  copy:
    src: /path/to/your/conf/kibana.yml
    dest: /etc/kibana/kibana.yml

# Enable Kibana service
- name: Enabling Kibana service
  systemd:
    name: kibana
    enabled: yes
    daemon_reload: yes

# Start Kibana service
- name: Starting Kibana service
  systemd:
    name: kibana
    state: started

- name: Ensure Kibana is running
  systemd:
    name: kibana
    state: started
```
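The kibana role copies a kibana.yml that you prepare yourself. As a hedged illustration of what it might contain for Kibana 7.x, this minimal sketch makes Kibana reachable from other machines and points it at an Elasticsearch node. es-node-1 is a hypothetical node name; it only resolves if the Kibana host also has the /etc/hosts entries written by configure-hosts-file.

```yaml
# Hypothetical minimal kibana.yml for Kibana 7.x
server.port: 5601                # Kibana's default port
server.host: "0.0.0.0"           # the default, "localhost", blocks remote browsers
elasticsearch.hosts: ["http://es-node-1:9200"]   # hypothetical node name
```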

10- logstash: Installs Logstash and configures logstash.yml and the Logstash pipeline.

```yaml
---
# Important: if you install Logstash on a node that already runs Elasticsearch,
# start from the third task (the key and repository are already in place).
# If you install Logstash on a clean instance, start from the first task.

# The APT repository cannot be used until its signing key is trusted
- name: Import the Elastic Key
  apt_key:
    url: https://artifacts.elastic.co/GPG-KEY-elasticsearch
    state: present

# Add Logstash APT repository
- name: Adding APT repository
  apt_repository:
    repo: deb https://artifacts.elastic.co/packages/7.x/apt stable main
    state: present

# Installing Logstash
- name: Update repositories cache and install Logstash
  apt:
    name: logstash
    update_cache: yes

#--------------------------
## LOGSTASH CONFIGURATION
#--------------------------

# Configure logstash.yml, if you have a specific config to use.
- name: Configure logstash.yml File
  copy:
    src: /path/to/your/logstash.yml
    dest: /etc/logstash/logstash.yml

# Configure Logstash filter.conf config file
- name: Configure Filter
  template:
    src: filter.conf.j2
    dest: /etc/logstash/conf.d/filter.conf
    owner: root
    group: root
    mode: "0644"

# Configure Beats (or any other input source) for the Logstash pipeline
- name: Configure input source
  template:
    src: input.conf.j2
    dest: /etc/logstash/conf.d/input.conf
    owner: root
    group: root
    mode: "0644"

# Configure the output for the Logstash pipeline
- name: Configure output source
  template:
    src: output.conf.j2
    dest: /etc/logstash/conf.d/output.conf
    owner: root
    group: root
    mode: "0644"

# Enable Logstash service
- name: Enable Logstash service
  systemd:
    name: logstash
    enabled: yes

# Start Logstash service
- name: Start Logstash service
  systemd:
    name: logstash
    state: started
    daemon_reload: yes
```
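The Start Logstash service task only makes sure Logstash is running. If a later run changes one of the pipeline templates, Logstash keeps the old pipeline until it restarts (unless config.reload.automatic is enabled in logstash.yml). A common Ansible pattern for this is a handler; the sketch below is an assumption, not part of the original role. It shows the notify line on one template task (the other two would get the same line) and the handler itself.

```yaml
# roles/logstash/tasks/main.yml (excerpt): add "notify" to each template task
- name: Configure Filter
  template:
    src: filter.conf.j2
    dest: /etc/logstash/conf.d/filter.conf
    owner: root
    group: root
    mode: "0644"
  notify: restart logstash

# roles/logstash/handlers/main.yml (hypothetical): runs once at the end of
# the play, and only if at least one notifying task reported a change
- name: restart logstash
  systemd:
    name: logstash
    state: restarted
```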

ELK Playbook

Below is the full Ansible playbook that uses the roles above (all except the optional jvm-options role) to set up the stack.

```yaml
- name: setup prerequisites
  hosts: elk-hosts
  become: true
  roles:
    - pre-requisites
    - configure-hosts-file

- name: install elasticsearch
  hosts: elk-hosts
  serial: 1
  become: true
  roles:
    - install-elasticsearch

- name: configure elasticsearch
  hosts: elk-hosts
  serial: 1
  become: true
  roles:
    - configure-elastic-file
    - change-permissions
    - systemd-conf
    - restart-elasticsearch

- name: Install Kibana
  hosts: kibana-host
  become: true
  roles:
    - kibana

- name: Install logstash
  hosts: logstash-host
  become: true
  roles:
    - logstash
```
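The playbook ends as soon as the last service has been started. To have the run itself confirm that the nodes formed a cluster, a final play along the lines of this sketch could be appended. It asks one node of the es-cluster group for _cluster/health and retries until the status is at least yellow. es_http_host is a hypothetical variable; its default, localhost, works when Elasticsearch listens on 0.0.0.0 or on the loopback interface.

```yaml
- name: verify elasticsearch cluster health
  hosts: es-cluster
  gather_facts: no
  tasks:
    - name: wait until the cluster status is at least yellow
      uri:
        url: "http://{{ es_http_host | default('localhost') }}:9200/_cluster/health?wait_for_status=yellow&timeout=30s"
        return_content: yes
      register: es_health
      # connection errors and 408 timeouts fail the attempt and trigger a retry
      until: es_health.status == 200
      retries: 10
      delay: 10
      run_once: true

    - name: show the cluster status
      debug:
        msg: "status={{ es_health.json.status }}, nodes={{ es_health.json.number_of_nodes }}"
      run_once: true
```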

Verify the cluster status:

```shell
# overall status (green, yellow or red) and node count
curl -XGET 'http://localhost:9200/_cluster/health?pretty'
# full cluster state (much larger output)
curl -XGET 'http://localhost:9200/_cluster/state?pretty'
```

I use this playbook to keep up to date with, and make use of, the newest features in Elasticsearch, Logstash, and Kibana: the whole stack can be installed and running with one command. Elasticsearch has many configuration options that aren't covered here, so review your configuration against the official documentation to make sure your cluster is configured to meet your needs.

Feedback is welcome!